Prior to the development of efficient audio coding schemes, midi files were the primary method of producing music on a home computer. Midi files are still used since they represent the music in symbolic form and are a common interchange format for the many music notation programs. These midi files are freely available on many websites and can be gathered using web scraping applications.

The Lakh MIDI Dataset v0.1 (colinraffel.com) is a large collection of midi files that was assembled to match entries in the Million Song Dataset. This note deals with a small subset of this collection called the Lakh Clean Midi Dataset. It consists of more than 17,000 files organized into subfolders named after the artists. Like the larger set, the Lakh Clean Midi Dataset consists mainly of popular songs, including rock, country music, rhythm and blues, and jazz.

In order to navigate through such a large database, a new application, midiexplorer, was developed. Besides allowing the user to browse and play any of the midi files, midiexplorer contains search tools for finding midi files with certain characteristics and provides a graphical preview of the files. The program has been in continuous development for the past few years, and it can extract further features from these files and store them as metadata in a separate database.

This web page presents and describes some of the metadata that has been collected for the Lakh Clean Midi Dataset. The metadata is available in the form of spreadsheet files and csv files. This database is valuable for finding midi files with specific characteristics. One of the applications of this collection was to investigate the use of certain unsupervised learning algorithms to cluster and represent the files in a two-dimensional space (umapAnalysis).

Knowing the genre of the music provides insight into the nature of the music encoded in the midi file. This information was gathered from Google and recorded in the tab-separated file genre.tsv. Though this information is not completely accurate and may contain inconsistencies, it is useful for finding midi files that have been assigned to a certain genre. The table below lists the music genres that were identified. The numbers in parentheses indicate the number of midi files assigned to each genre. Note that future edits to genre.tsv may not be reflected in this table.

| Genre (files) | Genre (files) | Genre (files) | Genre (files) |
|---|---|---|---|
| Pop (2013) | Rock (875) | Pop rock (538) | Alternative/Indie (458) |
| Jazz (336) | R&B/Soul (307) | Soft rock (264) | Hard rock (259) |
| Country (210) | Classical (198) | Alternative rock (180) | Schlager & Volksmusik (176) |
| Rock and roll (170) | R&B (159) | Folk rock (158) | Progressive rock (151) |
| Dance/Electronic (144) | Children's music (125) | Soul music (119) | Synth-pop (112) |
| Hip-hop (107) | New age (101) | Disco (100) | Classic rock (97) |
| Metal (87) | Easy listening (83) | Reggae (83) | Folk (81) |
| Contemporary R&B (81) | Dance-pop (77) | Funk (77) | New wave music (75) |
| Heavy metal (73) | Eurodance (69) | Grunge (69) | Blues rock (65) |
| Blues (58) | Singer-songwriter (52) | Adult contemporary (51) | Electronic (49) |
| Rag (49) | Psychedelic rock (45) | Punk rock (41) | MPB (40) |
| Latin pop (38) | New wave (37) | Techno (36) | Country rock (35) |
| Glam metal (32) | Glam rock (30) | Baroque pop (30) | Baroque (29) |
| Doo-wop (28) | Folk world (26) | Rockabilly (25) | Traditional pop (25) |
| Show tune (23) | Thrash metal (23) | Industrial rock (22) | Nu metal (22) |
| Electronic pop (21) | Record label (21) | Art rock (20) | Country pop (20) |
| Electronica (20) | Folk pop (19) | Heartland rock (18) | New-age music (18) |
| Post-disco (18) | Chanson (18) | Roots rock (18) | Progressive metal (17) |
| Jazz fusion (17) | Pop punk (17) | Chanson francaise (17) | Psychedelic pop (17) |
| Soul (16) | Power pop (16) | Garage rock (16) | Instrumental rock (16) |
| Holiday (16) | Blue-eyed soul (16) | Europop (15) | Mandarin pop (15) |
| House music (15) | Musical (14) | Jazz rock (14) | Opera (14) |
| Post-grunge (12) | Traditional pop music (12) | Big band (12) | Funk soul (12) |
| Progressive pop (12) | Beat music (12) | Southern rock (12) | Funk rock (11) |
| Sertanejo (11) | Art pop (11) | Electronic rock (11) | Musica tropicale (11) |
| Dance pop (11) | Britpop (11) | Arena rock (11) | Bubblegum pop (11) |
| Dance-rock (11) | Film score (10) | Gospel (10) | Halloween (10) |
| Psychedelic soul (9) | Electropop (9) | Synth-rock (9) | Alternative metal (9) |
| Christmas music (9) | Latin rock (9) | Jazz pop (9) | Bossa nova (9) |
| Groove metal (8) | Acoustic rock (8) | Trance (8) | Russian pop (8) |
| Ska punk (8) | Classic soul (8) | Singer songwriter (8) | Songwriter (8) |
| Reggae rock (8) | Psychedelic music (8) | Sunshine pop (8) | Smooth jazz (7) |
| Pop holiday (7) | Brill Building (7) | Vocal jazz (7) | Eurodisco (7) |
| Dance (7) | House (7) | French pop (7) | Jazz funk (7) |
| Rap metal (7) | Contemporary folk (6) | Classical pop (6) | Industrial metal (6) |
| Comedy (6) | Operatic pop (6) | Gothic rock (6) | Philadelphia soul (6) |
| Ragtime (6) | Post-punk (5) | Eurohouse (5) | Jangle pop (5) |
| Pop soul (5) | Tropipop (5) | Dance/electronic (5) | French Indie (5) |
| Novelty song (5) | Experimental pop (5) | Big beat (4) | Reggae fusion (4) |
| Alternative pop (4) | Progressive soul (4) | Funk metal (4) | Bluegrass (4) |
| Progressive house (4) | Rock opera (4) | Indie rock (4) | Bolero (4) |
| Ska (4) | Contemporary Christian (4) | Bebop (4) | Christian (4) |
| Teen pop (4) | Forro (4) | Psychedelic folk (4) | Orchestral pop (4) |
| Italo pop (4) | Polka (4) | Disco funk (4) | Rock pop (4) |
| Nederpop (4) | Italo disco (4) | Skate punk (4) | Easy Listening (4) |
| March (3) | Lebanese pop (3) | New jack swing (3) | Symphonic rock (3) |
| Downtempo (3) | Classical jazz (3) | Comedy rock (3) | Funk/Soul (3) |
| Eurodance pop (3) | Wagnerian rock (3) | Country blues (3) | Instrumental (3) |
| Boogie-woogie (3) | Indian classical music (2) | Rock blues (1) | Surf music (1) |
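The counts in parentheses are plain tallies of genre labels. As a minimal sketch, assuming (hypothetically) that each row of genre.tsv holds a midi file path and its genre separated by a tab, the tally can be reproduced with the Python standard library. The file names and genres in the sample are placeholders, not rows from the real file.

```python
import csv
import io
from collections import Counter

def count_genres(tsv_text: str) -> Counter:
    """Tally genre labels from tab-separated (file, genre) rows.

    The two-column layout is an assumption about genre.tsv, not its documented format.
    """
    counts = Counter()
    for row in csv.reader(io.StringIO(tsv_text), delimiter="\t"):
        if len(row) >= 2 and row[1].strip():
            counts[row[1].strip()] += 1
    return counts

# Placeholder rows for illustration only.
sample = (
    "ArtistA/Song1.mid\tRock\n"
    "ArtistB/Song2.mid\tRock\n"
    "ArtistC/Song3.mid\tClassical\n"
)
for genre, n in count_genres(sample).most_common():
    print(f"{genre} ({n})")
```

With pandas, the same tally would be a `value_counts()` on the genre column after `pd.read_csv(..., sep="\t")`.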

The core properties can be found in either the csv file lakhdb.csv or the spreadsheet lakhdb.ods. They include global properties of the midi files such as the number of tracks, the number of midi pulses per quarter note (ppqn), the maximum number of beats, and the total number of notes. The extreme values of these parameters are quite interesting and are valuable when designing the analysis programs.

The midi file with the longest name is Bela Bartok/Kezdok zongoramuzikaja (First Term at the Piano), BB 66_ No. 16_ Paraszttanc (Peasant's Dance)_ Allegro moderato.mid. Because this file name contains a comma, I could not use the comma as a separator in the database. Fortunately, it is safe to separate the values with a tab.
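The separator problem can be seen directly: splitting a line on commas breaks this file name into two fields, while a tab split keeps it whole because the name contains no tab. The second field below is a placeholder value, not data from lakhdb.csv.

```python
import csv
import io

name = ("Bela Bartok/Kezdok zongoramuzikaja (First Term at the Piano), "
        "BB 66_ No. 16_ Paraszttanc (Peasant's Dance)_ Allegro moderato.mid")
row = [name, "0"]  # placeholder second field

# Joined and split on commas, the file name itself is cut in two.
comma_line = ",".join(row)
print(len(comma_line.split(",")))  # 3 fields instead of 2

# Joined and split on tabs, the file name survives intact.
tab_line = "\t".join(row)
print(len(tab_line.split("\t")))  # 2 fields

# The csv module can write and read the same tab-separated row.
buf = io.StringIO()
csv.writer(buf, delimiter="\t").writerow(row)
buf.seek(0)
parsed = next(csv.reader(buf, delimiter="\t"))
print(parsed[0] == name)  # True
```

A CSV writer that quotes fields would also cope with the comma, but plain tab separation lets even a simple line split recover the fields.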

Here is the list of midi files with the largest number of notes. The number following the midi file name is the actual number of notes.

| Rank | Midi file | Notes |
|---|---|---|
| 1 | Carmina Burana/O Fortuna.mid | 40952 |
| 2 | Jeffrey Reid Baker/Carmina Burana_ Fortuna Imp... | 40944 |
| 3 | Mike Oldfield/Tubular Bells (part one).mid | 37317 |
| 4 | Oldfield, Mike/Tubular Bells (Part 1).mid | 37311 |
| 5 | Peter Allen/I Go to Rio.mid | 31466 |
| 6 | Genesis/In the Cage (medley)_ The Cinema Show ... | 30439 |
| 7 | Genesis/In the Cage.mid | 30437 |

Here is the list of midi files with the fewest notes.

| Rank | Midi file | Notes |
|---|---|---|
| 1 | Soundgarden/One Minute of Silence.mid | 1 |
| 2 | Jean Michel Jarre/Oxygene, Part 3.mid | 178 |
| 3 | Broadway/Eternal Journey.mid | 193 |
| 4 | Nine Inch Nails/Pinion.mid | 196 |
| 5 | Gilbert & Sullivan/The Mikado_ Tit Willow.mid | 206 |
| 6 | Soundgarden/667.mid | 211 |
| 7 | Nine Inch Nails/Eraser (Polite).mid | 230 |

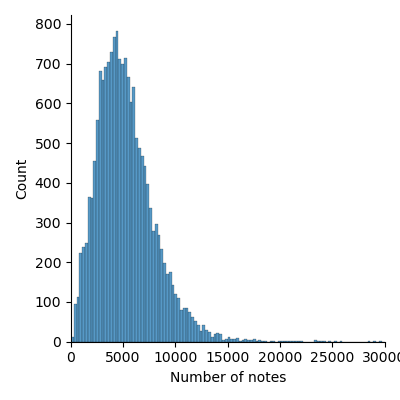

These are mainly outliers. Most of the midi files contained about 5000 notes. The histogram of the number of notes is shown here.
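Ranked lists like the two above amount to picking the k largest and k smallest values of one column. A minimal sketch with the standard library `heapq` module, using a few note counts copied from the lists; with pandas the equivalent would be `DataFrame.nlargest` and `DataFrame.nsmallest` on a note-count column (the column name would be whatever lakhdb.csv uses).

```python
import heapq

def extremes(counts: dict[str, int], k: int = 7) -> tuple[list, list]:
    """Return the k largest and the k smallest (file, value) pairs."""
    items = list(counts.items())
    largest = heapq.nlargest(k, items, key=lambda kv: kv[1])
    smallest = heapq.nsmallest(k, items, key=lambda kv: kv[1])
    return largest, smallest

# A few note counts copied from the lists above.
notes = {
    "Carmina Burana/O Fortuna.mid": 40952,
    "Mike Oldfield/Tubular Bells (part one).mid": 37317,
    "Peter Allen/I Go to Rio.mid": 31466,
    "Soundgarden/One Minute of Silence.mid": 1,
    "Nine Inch Nails/Pinion.mid": 196,
}
largest, smallest = extremes(notes, k=2)
print(largest)
print(smallest)
```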

The notes may be distributed among many tracks or channels, so the duration of the midi file depends on the number of beats in the longest track or channel. Here is a list of the longest midi files (largest number of beats). The number following the midi file name is the number of beats in the longest track.

| Rank | Midi file | Beats |
|---|---|---|
| 1 | Fiorello/Lei balla sola.mid | 23468 |
| 2 | Last/Rosamunde.1.mid | 22681 |
| 3 | Pooh/Il cielo e blu sopra le nuvole.mid | 11647 |
| 4 | Weather Report/Birdland.mid | 5124 |
| 5 | Mike Oldfield/Tubular Bells (part one).mid | 4333 |
| 6 | Oldfield, Mike/Tubular Bells (Part 1).mid | 4332 |
| 7 | Led Zeppelin/Thank You.1.mid | 4015 |
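The length in beats follows from the longest track: sum the delta times of each track to get its length in pulses, take the maximum, and divide by the PPQN. This sketch assumes the delta times have already been extracted by some midi parser; the two tracks below are hypothetical.

```python
def length_in_beats(track_deltas: list[list[int]], ppqn: int) -> float:
    """Length of a midi file in beats, measured by its longest track.

    track_deltas holds, for each track, the delta times (in pulses) of its events.
    """
    longest = max((sum(deltas) for deltas in track_deltas), default=0)
    return longest / ppqn

# Hypothetical tracks at 480 PPQN: 8 quarter notes and 5 half notes.
tracks = [[480] * 8, [960] * 5]
print(length_in_beats(tracks, 480))  # 10.0
```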

Here is the list of the shortest midi files (smallest number of beats).

| Rank | Midi file | Beats |
|---|---|---|
| 1 | Bryan Adams/Here I Am (End Title).mid | 35 |
| 2 | The Beatles/Her Majesty.mid | 35 |
| 3 | Bon Jovi/Everyday.mid | 36 |
| 4 | LeAnn Rimes/Life Goes On (MAS_H mix).mid | 41 |
| 5 | Britney Spears/Lucky.1.mid | 48 |
| 6 | Robbie Williams/Feel.mid | 48 |
| 7 | Broadway/Eternal Journey.mid | 50 |

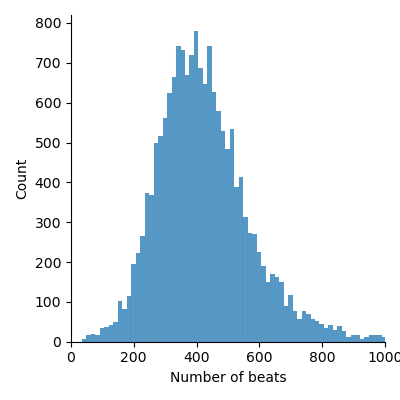

These are mainly outliers. Most files are about 500 beats long, as seen in the following histogram.

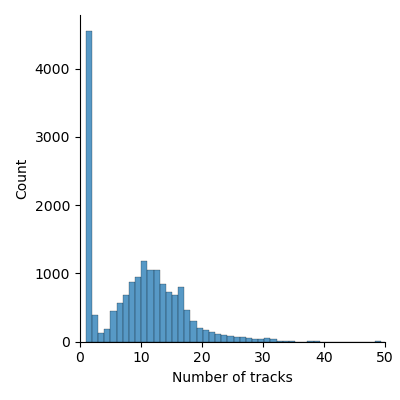

The histogram of the number of tracks in the midi files is shown here.

Many midi files were type 0 midi files, containing one track but many channels. The number of channels can range between 1 and the maximum of 16. If there is only one channel, it was usually assigned to the acoustic piano. A few files contained 130 midi tracks even though fewer than 10 channels were active. Here is the list of files with 130 tracks.

1. Bertie Higgins/Key Largo.mid
2. Gaye,Marvin/I Heard It Through The Grapevine.1...
3. Gaye,Marvin/Mercy Mercy Me (The Ecology).mid
4. Gaye,Marvin/What's Goin' On.1.mid
5. Johnny Cash/Folsom Prison Blues.mid
6. Stray Cats/Stray Cat Strut.1.mid
7. Bobby Brown/My Prerogative.1.mid
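Counting active channels does not depend on how many tracks a file has: the channel of a channel-voice message is the low nibble of its status byte. A minimal sketch, assuming events are given as hypothetical (status, data1, data2) tuples with running status already expanded, and treating a channel as active if it plays at least one note.

```python
def active_channels(events: list[tuple[int, ...]]) -> set[int]:
    """Return the midi channels (1-16) that play at least one note.

    A note-on message has status 0x90-0x9F; velocity 0 counts as a note-off.
    """
    channels = set()
    for status, *data in events:
        if 0x90 <= status <= 0x9F and len(data) == 2 and data[1] > 0:
            channels.add((status & 0x0F) + 1)
    return channels

events = [
    (0x90, 60, 100),  # note-on, channel 1
    (0x80, 60, 0),    # note-off, channel 1
    (0x99, 36, 90),   # note-on, channel 10 (percussion)
    (0xC0, 0),        # program change, channel 1 (not a note)
    (0x91, 64, 0),    # note-on with velocity 0, i.e. a note-off, channel 2
]
print(sorted(active_channels(events)))  # [1, 10]
```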

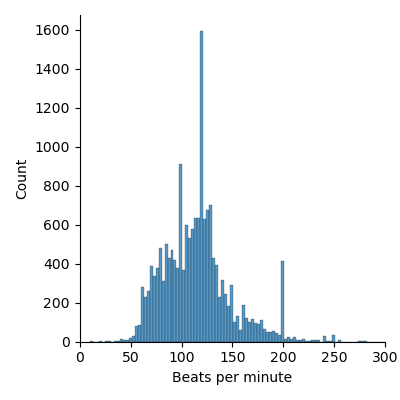

By default the tempo is assumed to be 120 beats per minute (bpm). As seen in the histogram below, the tempo ranges from 20 bpm to 300 bpm.
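In a standard midi file the tempo is stored in a Set Tempo meta event (FF 51 03 followed by three bytes) as microseconds per quarter note, so the tempo in quarter notes per minute is 60,000,000 divided by that value. The default of 500,000 microseconds corresponds to 120 bpm. A small conversion sketch:

```python
DEFAULT_US_PER_QUARTER = 500_000  # 500,000 microseconds per quarter note = 120 bpm

def tempo_to_bpm(data: bytes) -> float:
    """Convert the 3 data bytes of a Set Tempo meta event (FF 51 03 tt tt tt) to bpm."""
    if len(data) != 3:
        raise ValueError("Set Tempo carries exactly 3 data bytes")
    us_per_quarter = int.from_bytes(data, "big")
    return 60_000_000 / us_per_quarter

print(tempo_to_bpm(DEFAULT_US_PER_QUARTER.to_bytes(3, "big")))  # 120.0
print(tempo_to_bpm(bytes([0x03, 0x0D, 0x40])))                  # 300.0
print(tempo_to_bpm(bytes([0x2D, 0xC6, 0xC0])))                  # 20.0
```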

Files with very low tempos tend to be classical.

Dvorak/Symphony nr. 9 E-moll op. 85_II.Largo.mid

Ludwig van Beethoven/Pathetique.mid

Pink Floyd/Shine On You Crazy Diamond, Part Two.mid

There are few files with tempos close to 300.

Beastie Boys/Girls.mid

ZZ Top/Viva Las Vegas.mid

Dance music tends to be at the higher tempos.

Though most midi files play at a fixed tempo, some have as many as several thousand tempo changes. In such cases, small tempo adjustments are made so that the notes are quantized to a grid without losing the musician's styling. In other cases, tempo adjustments are made to slow down the music at the end of the piece.

The dlakhdb spreadsheet lists the number of tempo declarations for each midi file. Sixty files did not specify a tempo, and 11,836 had just one tempo declaration. The histogram of the tempo counts has a long tail. Here is a list of a few extremes.

| Midi file | Tempo declarations |
|---|---|
| The Cranberries/Pathetic Senses.mid | 11835 |
| The Cranberries/Uncertain.mid | 4961 |
| The Cranberries/So Cold in Ireland.mid | 3810 |

Midi files express time in pulse units, where the number of pulses per quarter note (PPQN) is declared in the header of the file. The PPQN is usually divisible by both 3 and a power of 2 (at least 8), so that the durations of 32nd notes (PPQN/8) and triplets (PPQN/3) are whole numbers of pulses. Common PPQN values are 120 and 240. The number of pulses per second is determined by the PPQN together with the tempo command. By default, the tempo is set at 120 quarter notes per minute.
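The PPQN sits in the 14-byte MThd header chunk: the chunk id, a 4-byte length (6), then three 16-bit big-endian fields for format, number of tracks, and time division. When the top bit of the division is clear, the division is the PPQN. The sketch below parses a header built in memory and converts PPQN and tempo to pulses per second.

```python
import struct

def read_header(data: bytes) -> tuple[int, int, int]:
    """Parse the MThd chunk of a standard midi file and return (format, ntracks, ppqn)."""
    chunk_id, length, fmt, ntracks, division = struct.unpack(">4sIHHH", data[:14])
    if chunk_id != b"MThd" or length < 6:
        raise ValueError("not a standard midi file")
    if division & 0x8000:
        raise ValueError("SMPTE time division, not PPQN")
    return fmt, ntracks, division

def pulses_per_second(ppqn: int, us_per_quarter: int = 500_000) -> float:
    """Pulses per second for a given PPQN and tempo (default tempo is 120 bpm)."""
    return ppqn * 1_000_000 / us_per_quarter

# A type 0 header with one track and 120 PPQN, built in memory.
header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, 120)
fmt, ntracks, ppqn = read_header(header)
print(fmt, ntracks, ppqn)        # 0 1 120
print(pulses_per_second(ppqn))   # 240.0
print(ppqn // 8, ppqn // 3)      # 15 40: a 32nd note and a triplet eighth, in pulses
```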

Midi files are created either with music notation software or by playing an electronic instrument such as a keyboard or guitar. In the former case, notes are aligned to a grid derived from the quarter note, and their positions and durations are computed from their note values. This makes it easy to recover the music notation from the midi file. When the midi file originates from a midi instrument, the start and end of each note depend on how it is performed and will probably not line up exactly on a grid. Software exists to quantize these notes to standard notation lengths with minimal loss of quality.

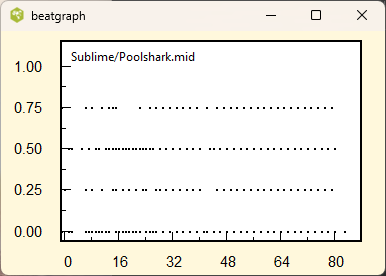

The above plots show beatgraphs for a quantized and an unquantized midi file. The position of each note onset inside its beat is plotted as a function of the beat number. The music in the left plot is quantized: all the quarter, eighth, and sixteenth notes are properly aligned on a grid. The right plot was produced from an unquantized midi file. When that file is divided into equal quarter-note beat intervals, the note onsets fall at random positions within each beat.
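Each point of a beatgraph can be derived from an onset time in pulses: integer division by the PPQN gives the beat number, and the remainder divided by the PPQN gives the position inside the beat. A minimal sketch, assuming onset times have already been extracted from the file; the points could then be drawn with, for example, matplotlib's `scatter`.

```python
def beatgraph_points(onsets: list[int], ppqn: int) -> list[tuple[int, float]]:
    """Map note onsets (in pulses) to (beat number, fractional position within the beat)."""
    return [(t // ppqn, (t % ppqn) / ppqn) for t in onsets]

# Quantized eighth notes at 120 PPQN land exactly on 0.0 and 0.5 of each beat.
print(beatgraph_points([0, 60, 120, 180], 120))
# [(0, 0.0), (0, 0.5), (1, 0.0), (1, 0.5)]
```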

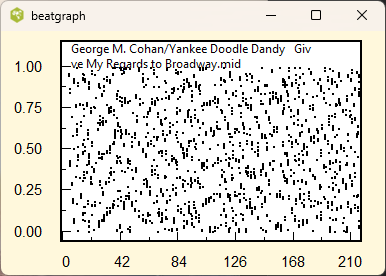

We can produce a note onset histogram by computing the histogram of the note onset times modulo PPQN. (A number modulo n is the remainder when the number is divided by n.) If the midi file is quantized, all the note onset times fall on one of a few specific positions.

The above plots show the normalized note onset histograms (or distributions) for several midi files. The top left plot (Sublime/Poolshark) is typical of music whose shortest note is a sixteenth note and which contains no triplets. The top right plot is an example of unquantized note onsets. The lower left plot (Rionegro/Rodopiou) illustrates dithering applied to quantized onsets: performed notes do not start at exactly the same instant, which allows the listener to separate the distinct instruments. The lower right plot is an example of the distribution for music containing many triplets.
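The histogram of onset times modulo PPQN takes only a few lines. This sketch uses hypothetical onsets on a sixteenth-note grid at 120 PPQN, so every onset falls on one of four positions within the beat.

```python
from collections import Counter

def onset_histogram(onsets: list[int], ppqn: int) -> dict[int, float]:
    """Normalized histogram of note onset times modulo PPQN."""
    counts = Counter(t % ppqn for t in onsets)
    total = sum(counts.values())
    return {pos: n / total for pos, n in sorted(counts.items())}

# Sixteenth-note grid at 120 PPQN: onsets only at 0, 30, 60 and 90 within the beat.
onsets = [0, 30, 60, 90, 120, 180, 240, 270]
print(onset_histogram(onsets, 120))
# {0: 0.375, 30: 0.25, 60: 0.25, 90: 0.125}
```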

The midi files are categorized into four quantization types: c, clean quantization (top left); d, dithered quantization (bottom left); n, no quantization (top right); and u, unknown quantization. These files were categorized using a rule-based heuristic classifier. The number of files in each category is listed here.

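| Type | Description | Files |
|---|---|---|
| c | clean quantization | 11497 |
| d | dithered quantization | 4862 |
| n | no quantization | 471 |
| u | unknown quantization | 346 |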
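The rules of the classifier used here are not given in this note. Purely as an illustration of how a rule-based heuristic of this kind could work, the sketch below measures how many onsets fall exactly on, or just next to, a grid of PPQN/12 pulses (fine enough for sixteenths and triplets). The grid, the tolerance and the 0.9 and 0.6 thresholds are all invented for this example.

```python
def quantization_type(onsets: list[int], ppqn: int) -> str:
    """Hypothetical rule-based label: 'c' clean, 'd' dithered, 'n' none, 'u' unknown.

    Grid, tolerance and thresholds are illustrative guesses, not the article's rules.
    """
    if not onsets or ppqn % 12:
        return "u"                         # no data, or the grid is not whole pulses
    step = ppqn // 12                      # grid step covering sixteenths and triplets
    tol = max(1, round(ppqn / 96))         # "just next to the grid" tolerance in pulses
    exact = near = 0
    for t in onsets:
        offset = t % step
        dist = min(offset, step - offset)  # distance to the nearest grid point
        if dist == 0:
            exact += 1
        elif dist <= tol:
            near += 1
    on_grid = exact / len(onsets)
    close = (exact + near) / len(onsets)
    if on_grid >= 0.9:
        return "c"
    if close >= 0.9:
        return "d"
    if close < 0.6:
        return "n"
    return "u"

print(quantization_type([0, 30, 60, 90, 120, 160, 200], 120))  # c
print(quantization_type([1, 29, 61, 90, 119, 151], 120))       # d
```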

Most of the midi files are either quantized or quantized with dithering. Many of the files that were categorized as unknown should have been labeled as dithered quantization. The detailed results are stored in the dlakhdb spreadsheet.

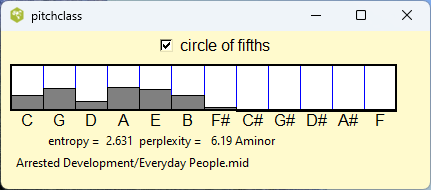

Most midi files do not specify the key signature of the music; however, in most cases it can be inferred from the distribution of the notes in the music. Here is an example of the pitch class distribution in the key of A minor.

Note that the notes along the horizontal axis increase in perfect fifths. This makes the distribution more compact and easier to interpret. The absence of flat and sharp notes indicates that the music is in either C major or A minor. The fact that the peak occurs at A rather than C suggests that the music is in A minor.
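The reasoning above can be turned into a simple heuristic. Reordering the 12 pitch classes by fifths means stepping 7 semitones at a time. The sketch then picks the major scale whose seven notes carry the most weight and chooses its relative minor when the minor tonic is the stronger peak. This only mirrors the argument in the text; it is not necessarily the algorithm used to estimate the keys reported below.

```python
NAMES = ["C", "C#", "D", "Eb", "E", "F", "F#", "G", "Ab", "A", "Bb", "B"]
MAJOR_SCALE = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets of a major scale

def fifths_order(hist: list[float]) -> list[tuple[str, float]]:
    """Reorder a 12-bin pitch class histogram (index 0 = C) along the circle of fifths."""
    return [(NAMES[7 * i % 12], hist[7 * i % 12]) for i in range(12)]

def guess_key(hist: list[float]) -> str:
    """Pick the diatonic collection with the most weight, then major vs relative minor."""
    def weight(tonic: int) -> float:
        return sum(hist[(tonic + s) % 12] for s in MAJOR_SCALE)
    major = max(range(12), key=weight)
    minor = (major + 9) % 12  # tonic of the relative minor
    return NAMES[minor] + "min" if hist[minor] > hist[major] else NAMES[major]

# No sharps or flats, with the peak on A (index 9).
a_minor = [5, 0, 4, 0, 8, 3, 0, 2, 0, 10, 0, 6]
print([name for name, _ in fifths_order(a_minor)])
print(guess_key(a_minor))  # Amin
```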

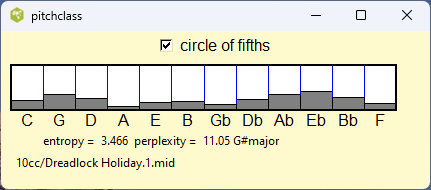

Not all pitch class distributions are as easy to interpret. For example, it is not uncommon for the music to modulate to another key. The pitch class distribution now reflects a mixture of two or more keys.

Note that in most midi files, the chordal and rhythmic accompaniment predominates over the melody line. Though the melody line carries most of the information in the music, the pitch class distribution reflects the accompaniment more than the melody.

The most common key signatures are C, G, D, F, Amin, and Emin. There were about 1000 midi files for which the algorithm could not establish the key with much certainty. Those files usually contain a key change.

Nearly all the midi files in this collection have a homophonic texture. This means that the melody is contained in one of the channels, and the remaining channels provide chordal and rhythmic support. Of course there are many exceptions; for example, in jazz music the melody moves around the different instruments. In other music genres such as hip hop, the vocalist may sing the lyrics in more of a speaking voice, and the midi file may not have any melodic line.

The melodic line plays an important part in the midi file; the music is frequently not recognizable without it. In order to extract the characteristics of the midi files, it is useful to identify the tracks (or channels) that carry the melody.

It is not difficult to identify the function of a track by examining the score. See the samples below.

The melodic line is commonly played in the treble range. Its rhythm changes from bar to bar, and its notes tend to move in small steps with infrequent jumps. The melodic line is usually played solo.

Rhythmic and harmonic support is produced by a sequence of block chords. The rhythm tends to repeat from bar to bar, and the chords may be identical in each bar.

The bass line looks like a melody line, but it is played in a much lower octave.

The next step was to find a set of features that could be used to identify the melodic lines. One useful feature is the midi program assigned to the channel. For example, the pan flute is frequently used to carry the melody; on the other hand, the melody line is never played by the slap bass. The midi program assignment was therefore assumed to be one of the key features.

Features were chosen based on the information exposed by the midiexplorer program. Databases of these feature values were created using the helper programs associated with midiexplorer. Here is a list of the features that we have chosen.

These parameters form a six-dimensional vector space that can be used to separate the two classes (assuming little overlap). This is a simplification, since the context of these vectors is also important. Nevertheless, it is still useful to get a feel for how these parameters behave in the real data.
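The exact definitions of the features are not reproduced here, so the following is only a hypothetical sketch of interval and rhythm features in the spirit of the descriptions above. It reuses the names zeros, steps, jumps, pavg and rpat that appear in the coefficient table further down; the thresholds (steps of 1 to 2 semitones, jumps wider than 7 semitones) and the bar-pattern definition of rpat are assumptions.

```python
from statistics import mean

def melodic_features(notes: list[tuple[int, int]], ppqn: int,
                     beats_per_bar: int = 4) -> dict[str, float]:
    """Hypothetical interval and rhythm features for one voice.

    notes: (onset in pulses, midi pitch) pairs sorted by onset.
    """
    pitches = [p for _, p in notes]
    intervals = [abs(b - a) for a, b in zip(pitches, pitches[1:])]
    n = len(intervals) or 1
    bar = ppqn * beats_per_bar
    bars: dict[int, list[int]] = {}
    for onset, _ in notes:
        bars.setdefault(onset // bar, []).append(onset % bar)
    return {
        "zeros": sum(i == 0 for i in intervals) / n,       # repeated pitches
        "steps": sum(1 <= i <= 2 for i in intervals) / n,  # moves of a second
        "jumps": sum(i > 7 for i in intervals) / n,        # leaps wider than a fifth
        "pavg": mean(pitches) if pitches else 0.0,         # average pitch
        "rpat": len({tuple(v) for v in bars.values()}),    # distinct bar rhythms
    }

# Two bars at 120 PPQN: four quarter notes, then two quarter notes.
tune = [(0, 60), (120, 62), (240, 64), (360, 64), (480, 72), (600, 71)]
print(melodic_features(tune, 120))
```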

The Python package pandas is a useful data analysis library. It allows one to extract many useful features with just a few lines of code. The database was reorganized into groups by midi program and melody status using the groupby function, and the above parameters were then estimated for each group using aggregation functions.
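In pandas the grouping step would look like `df.groupby(["program", "melody"]).median()`, where the column names are hypothetical. To keep the example dependency-free, the sketch below reproduces the same group-then-median logic with the standard library. The rows are invented; program 36 is the Fretless Bass in the 1 to 128 numbering.

```python
from collections import defaultdict
from statistics import median

def grouped_medians(rows: list[dict], keys: tuple[str, ...], value: str) -> dict[tuple, float]:
    """Median of `value` for every combination of the `keys` columns."""
    groups: dict[tuple, list] = defaultdict(list)
    for row in rows:
        groups[tuple(row[k] for k in keys)].append(row[value])
    return {group: median(values) for group, values in sorted(groups.items())}

# Invented rows: melody flag T/F, average pitch per channel.
rows = [
    {"program": 36, "melody": "F", "pavg": 37.0},
    {"program": 36, "melody": "F", "pavg": 39.0},
    {"program": 36, "melody": "F", "pavg": 36.0},
    {"program": 36, "melody": "T", "pavg": 64.0},
    {"program": 36, "melody": "T", "pavg": 66.0},
]
print(grouped_medians(rows, ("program", "melody"), "pavg"))
# {(36, 'F'): 37.0, (36, 'T'): 65.0}
```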

Here is a table of the medians of these parameters separated by midi program and whether the channel was assigned as melody (T) or not (F). (The median rather than the average was used since it is less sensitive to outliers.)

The table exposes many interesting results:

Most of the midi programs have been used to play the main melody; however, about 20 midi programs were unsuitable. They include exotic sounds such as Bird Tweet, Helicopter, Seashore, Telephone Ring, etc. Percussion instruments such as Taiko Drum, Woodblock, and Reverse Cymbal are also excluded. Other midi programs, such as Alto Sax, Flute, Pan Flute, and Vibraphone, were favorites for presenting the melody. When those instruments appear, there is a significant probability that the melody is in that channel. For example, 288 of the 569 channels assigned to the flute carried the melody.

With a couple of exceptions, the average pitch depended only on the midi instrument and was not correlated with whether or not the channel was melodic. The main exceptions were the Electric Bass, Synth Bass, Fretless Bass, and a few other instruments. In most cases the average pitch for the Fretless Bass was around 37; if the average pitch was in the 60s, the channel was very likely playing the melody line. The same applied to the other instruments mentioned.

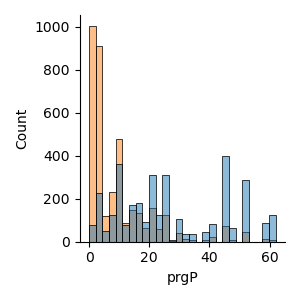

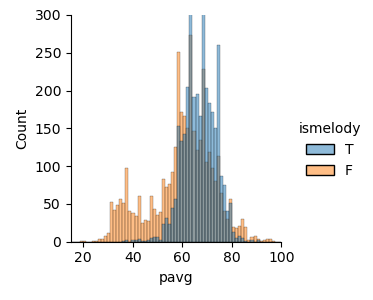

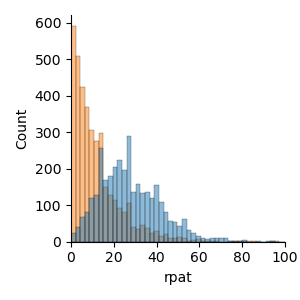

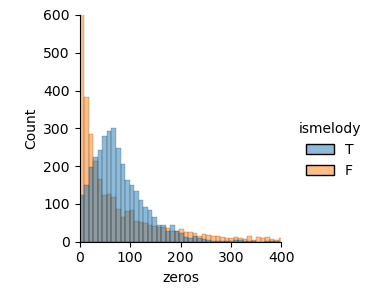

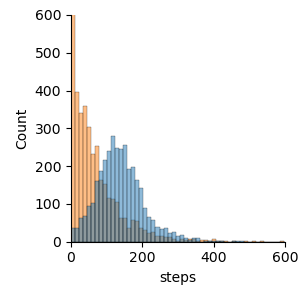

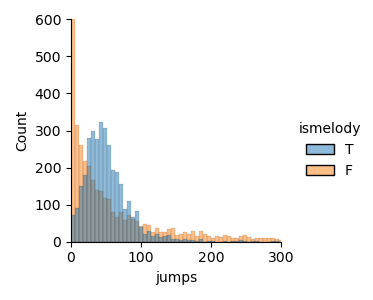

Histograms of the other variables are shown below. Most of the tracks or channels in the midi file are chordal and rhythmic support and do not contain the melody. For purposes of clarity, we thinned out the non-melodic channels so that they would not overload the histograms.

The histograms of the melodic channels (light blue) and of the non-melodic channels (orange) are overlaid on the same figure. The overlapping regions are shown in grey. The prgP variable is, for each of the midi programs (1 to 128), the number of channels expressed as a percentage of the total number of channels.

Though there is some separation between the two distributions, there is a significant amount of overlap. Hopefully, there is more separation in the higher-dimensional space, allowing a classifier to distinguish the melodic and non-melodic channels.

Fortunately, more than 2000 of the midi files in the Lakh Clean database label the track or channel containing the main melody with the word MELODY or something similar. These melody tracks represent only a small fraction of all the available tracks, as most tracks provide either rhythmic or harmonic support. In total, about 28,000 midi tracks in these files could be identified as either melodic or non-melodic. Since using all of them would severely bias the training towards the non-melodic lines, only a randomly sampled fraction of those tracks was used for training. The collection of labeled tracks was split into training and test sets so that we could evaluate the accuracy of the classifier.
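The note does not say exactly how many non-melodic tracks were kept, so this sketch simply assumes the majority class is undersampled to the size of the melodic class before a random train/test split. scikit-learn's `train_test_split` (in `sklearn.model_selection`) performs the split part; the version below uses only the standard library, and the track identifiers are placeholders.

```python
import random

def balanced_split(melodic: list, non_melodic: list,
                   test_fraction: float = 0.2, seed: int = 0) -> tuple[list, list]:
    """Undersample the non-melodic tracks, then split into train and test sets.

    Returns (train, test) as lists of (features, label) with label 1 = melodic.
    Equal class sizes are an assumption, not the ratio used in the article.
    """
    rng = random.Random(seed)
    k = min(len(melodic), len(non_melodic))
    sampled = rng.sample(non_melodic, k)  # random subset of the majority class
    data = [(x, 1) for x in melodic] + [(x, 0) for x in sampled]
    rng.shuffle(data)
    n_test = int(len(data) * test_fraction)
    return data[n_test:], data[:n_test]

melodic = [f"mel{i}" for i in range(100)]
non_melodic = [f"acc{i}" for i in range(1000)]
train, test = balanced_split(melodic, non_melodic)
print(len(train), len(test))  # 160 40
```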

The Python scikit-learn package provides many methods for building a classifier. The linear discriminant classifier was chosen because it requires few parameters and is easy to implement in any computer language. The value of the linear discriminant indicates the degree of confidence in its classification.

For the two-class problem, the linear discriminant divides the feature space into two regions using a hyperplane. Like Principal Component Analysis (PCA), the algorithm reduces the dimensionality of the space. Unlike PCA, the transform is chosen to maximize the separability between the two classes. Numerous explanations of how the algorithm works are available on YouTube.
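To show why the method is easy to implement, here is a from-scratch two-class Fisher linear discriminant with NumPy, rather than the scikit-learn `LinearDiscriminantAnalysis` class. The projection direction is the inverse within-class scatter matrix applied to the difference of the class means. The threshold is placed halfway between the projected means, which assumes equal class sizes. The data are synthetic six-dimensional points, not the article's features.

```python
import numpy as np

def fit_fisher_lda(X: np.ndarray, y: np.ndarray) -> tuple[np.ndarray, float]:
    """Two-class Fisher linear discriminant.

    Returns (w, b) so that the decision value X @ w + b is positive for class 1.
    """
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter: sum of the two class scatter matrices.
    sw = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)
    w = np.linalg.solve(sw, mu1 - mu0)
    # Threshold halfway between the projected class means (equal priors assumed).
    b = -0.5 * (mu0 + mu1) @ w
    return w, b

# Synthetic data: two overlapping Gaussian clouds in six dimensions.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, size=(200, 6)), rng.normal(1.0, 1.0, size=(200, 6))])
y = np.array([0] * 200 + [1] * 200)
w, b = fit_fisher_lda(X, y)
pred = (X @ w + b > 0).astype(int)
accuracy = float((pred == y).mean())
print("training accuracy:", accuracy)
```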

The linear discriminant analysis was applied to the six-dimensional training vectors and evaluated on a test set of 1428 vectors. An accuracy in the neighbourhood of 85 percent was typically obtained over various test runs. For the non-melodic samples, the precision and recall were 0.84 and 0.88; for the melodic samples, they were 0.85 and 0.81. The linear discriminant coefficients were:

| Feature | Coefficient |
|---|---|
| prgP | 0.066 |
| rpat | 0.070 |
| zeros | -0.001 |
| steps | 0.123 |
| jumps | -0.006 |
| pavg | 0.038 |

Based on these values, the midi program, the number of rhythm patterns, and the number of steps were the most influential parameters in identifying the melodic tracks or channels.
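The per-class precision and recall figures quoted for the test set come from counting true positives, false positives, and false negatives separately for each class. A small sketch on toy labels (not the article's data); scikit-learn's `classification_report` in `sklearn.metrics` prints the same per-class numbers.

```python
def precision_recall(y_true: list[int], y_pred: list[int], positive: int) -> tuple[float, float]:
    """Precision and recall, treating `positive` as the class of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Label 1 = melodic, 0 = non-melodic (toy labels).
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0]
print(precision_recall(y_true, y_pred, positive=1))  # (1.0, 0.75)
print(precision_recall(y_true, y_pred, positive=0))  # (0.8, 1.0)
```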

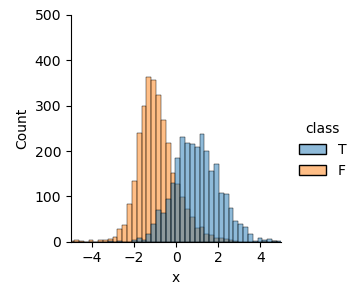

Here are the two histograms of the data samples projected onto the decision axis.

The decision criterion ranges from -4 to +4. Unfortunately, there is a large overlap between the two classes, which limits the achievable accuracy. An accuracy of about 85 percent was obtained for both the training and test sets. The fact that both sets returned the same accuracy implied that we would not gain more accuracy by obtaining a larger set of labeled tracks.

It was noted that, for the set of tracks (channels) in a particular midi file, the linear discriminant value of the melodic track was frequently larger than that of any other track. Therefore, we could design the classifier to designate the track with the highest discriminant as the melodic track. As an additional precaution, we required that the average pitch of that track exceed 45 midi pitch units. Using this set of rules, the classifier had a false positive rate of 2.5 percent, but it missed the melody track 23 percent of the time.
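The selection rule can be sketched with the coefficients from the table above. The intercept is not needed because only the ranking of tracks within one file matters. Two assumptions are made here: that the coefficients apply to the features in their raw units (the scaling used in the article is not stated), and that a top-ranked track with an average pitch of 45 or lower means no melody is reported. The per-track feature values are made up.

```python
COEFFS = {"prgP": 0.066, "rpat": 0.070, "zeros": -0.001,
          "steps": 0.123, "jumps": -0.006, "pavg": 0.038}

def discriminant(features: dict[str, float]) -> float:
    """Linear discriminant value without intercept (enough for ranking tracks)."""
    return sum(COEFFS[name] * features[name] for name in COEFFS)

def pick_melody_track(tracks: dict[int, dict[str, float]], min_pavg: float = 45.0):
    """Return the track with the largest discriminant, or None if its pavg is too low."""
    if not tracks:
        return None
    best = max(tracks, key=lambda t: discriminant(tracks[t]))
    return best if tracks[best]["pavg"] > min_pavg else None

# Made-up feature values for three tracks of one file.
tracks = {
    1: {"prgP": 50, "rpat": 12, "zeros": 10, "steps": 40, "jumps": 5, "pavg": 70},
    2: {"prgP": 20, "rpat": 2, "zeros": 30, "steps": 10, "jumps": 2, "pavg": 50},
    3: {"prgP": 30, "rpat": 10, "zeros": 5, "steps": 45, "jumps": 3, "pavg": 38},
}
print(pick_melody_track(tracks))  # 1
```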

This approach assumes that there is only one melodic track or channel in the file, which is certainly not always true. Sometimes the melodic track is doubled to make it stand out. In other cases, particularly in jazz, the melody shifts between different instruments. Finally, some midi files, particularly hip-hop, have no melody track.

In conclusion, the linear discriminant finds the melody channel or track most of the time, but there are many exceptions.